
Measuring Social and Emotional Learning: The 6-D Approach

Social and emotional learning (SEL) is the process of acquiring skills to identify, label, express, and regulate emotions. Over the past decade, educators have recognized its significance and introduced SEL interventions in schools as a preventive measure. These interventions are now implemented in schools worldwide, so it is crucial to evaluate their effectiveness. Assessing outcomes allows educators to make informed decisions and to check that these interventions actually promote emotional intelligence and positive social interactions.

To illustrate why measurement matters, consider an analogy. Imagine baking a cake for the first time: you add the ingredients without using measuring cups, bake at a random temperature, and serve the cake without even tasting it. Will it taste as planned? Just as baking a cake requires precise measurements and careful checking, social and emotional learning interventions must be planned, implemented, and assessed to determine whether they are effective.

However, measuring social and emotional learning poses several challenges because the concept is multifaceted. There is no universally agreed-upon framework, and the diversity of definitions makes it difficult to establish standardized measurement tools. SEL development also varies across age groups, so assessment strategies must be age-appropriate. Addressing these complexities requires ongoing research, collaboration, and the development of valid and reliable measurement instruments that capture SEL accurately.

So, how do we measure social and emotional learning?

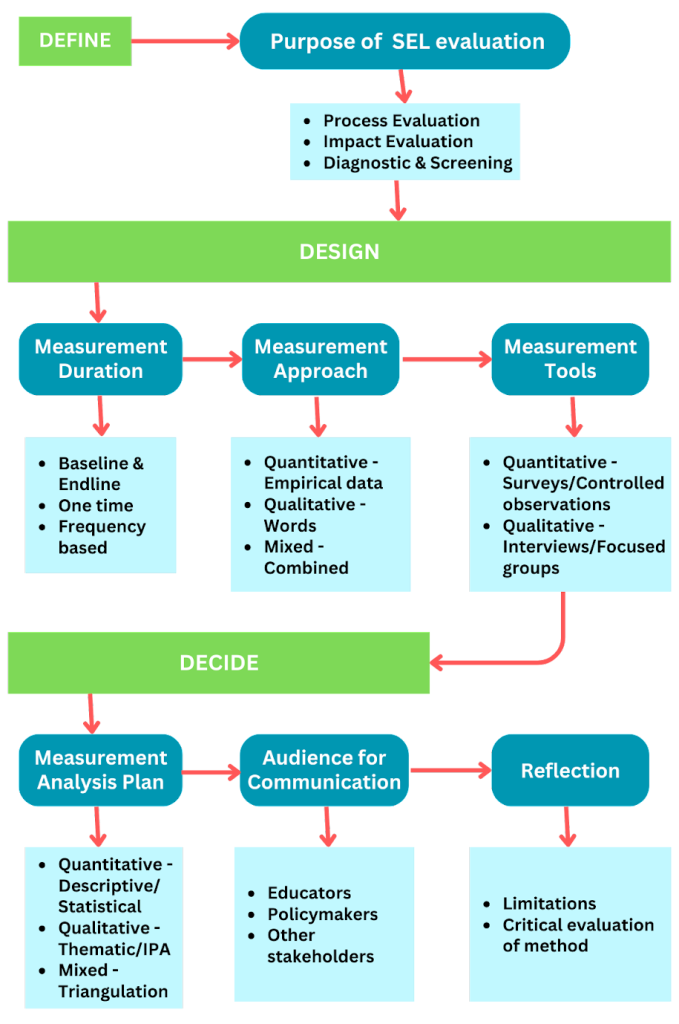

- Define the purpose of SEL evaluation

It is important to decide first what you want to measure. Researchers typically carry out one or more of the following:

- Process evaluation: This evaluates whether an intervention is being implemented and delivered as planned. Data is collected while the intervention is under way.

- Impact evaluation: This evaluates whether an intervention is having its intended outcomes, such as changes in participants’ attitudes, behaviours, or overall school climate. Data is collected at the beginning and the end of the intervention. As a rigorous research design, it supports an evidence-based approach to interventions.

- Diagnostic and screening assessment: This evaluates a child’s social-emotional competence. Data can be collected at any time point. It helps in understanding the individual dimensions of social and emotional learning in order to plan an intervention, curriculum, or training.
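For a process evaluation, one simple indicator of whether delivery went as planned is dosage: the share of planned sessions that were actually delivered. The sketch below is a hypothetical illustration, assuming facilitators keep a log of planned and delivered sessions per classroom. The `SessionLog` structure, the classroom names, and the 80% threshold are assumptions made for this example, not part of any standard tool.

```python
from dataclasses import dataclass


@dataclass
class SessionLog:
    """Hypothetical per-classroom delivery log kept by a facilitator."""
    classroom: str
    planned_sessions: int
    delivered_sessions: int


def dosage_rate(log: SessionLog) -> float:
    """Share of planned sessions that were actually delivered."""
    if log.planned_sessions <= 0:
        raise ValueError("planned_sessions must be positive")
    return log.delivered_sessions / log.planned_sessions


def flag_low_dosage(logs: list[SessionLog], threshold: float = 0.8) -> list[str]:
    """Classrooms whose delivery fell below the (illustrative) threshold."""
    return [log.classroom for log in logs if dosage_rate(log) < threshold]


# Made-up example logs, not real data.
logs = [
    SessionLog("Grade 6A", planned_sessions=20, delivered_sessions=18),
    SessionLog("Grade 6B", planned_sessions=20, delivered_sessions=12),
    SessionLog("Grade 7A", planned_sessions=20, delivered_sessions=20),
]

for log in logs:
    print(f"{log.classroom}: {dosage_rate(log):.0%} of planned sessions delivered")
print("Below threshold:", flag_low_dosage(logs))
```

With these made-up logs only Grade 6B (60% delivered) is flagged, which would prompt a closer look at why sessions were missed there before interpreting any outcome data.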

- Design the timeline of measurement

Setting a timeline for data collection is crucial. A common research practice is to conduct baseline and endline assessments. The baseline assessment occurs before the intervention begins and provides a starting point for comparison; the endline assessment takes place after the intervention has been implemented. Collecting data at both time points lets you evaluate change over time and determine whether the intervention has achieved the desired outcomes. The same participants should be assessed at baseline and endline so that the two sets of scores are comparable. Alternatively, researchers can collect data only once or at several time intervals, depending on their research objectives. One-time assessments are often used to gauge participants’ social-emotional competence, while repeated measurements at different intervals allow the intervention to be monitored over time.
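A baseline/endline comparison can be sketched as follows, assuming each student has an anonymized ID and a single total scale score at each time point (the IDs and scores are made up). Only students present at both time points are kept, which enforces the same-population rule above; the sketch then reports the mean change, a paired t statistic, and Cohen's d_z (mean change divided by the standard deviation of the change scores). Note that a simple pre-post change cannot by itself separate the intervention's effect from maturation or other external factors.

```python
import math
import statistics


def matched_pairs(baseline: dict[str, float], endline: dict[str, float]) -> list[tuple[float, float]]:
    """Keep only participants who were assessed at both time points."""
    common_ids = sorted(baseline.keys() & endline.keys())
    return [(baseline[pid], endline[pid]) for pid in common_ids]


def paired_change(baseline: dict[str, float], endline: dict[str, float]) -> dict[str, float]:
    """Mean change, paired t statistic, and Cohen's d_z for matched participants."""
    pairs = matched_pairs(baseline, endline)
    if len(pairs) < 2:
        raise ValueError("need at least two matched participants")
    diffs = [post - pre for pre, post in pairs]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD of the change scores
    if sd_diff == 0:
        raise ValueError("change scores have zero variance")
    return {
        "n_matched": len(diffs),
        "mean_change": mean_diff,
        # Paired t statistic: mean change divided by its standard error.
        "t": mean_diff / (sd_diff / math.sqrt(len(diffs))),
        # d_z: mean change expressed in SD units of the change scores.
        "d_z": mean_diff / sd_diff,
    }


# Hypothetical total scale scores keyed by anonymized student ID.
baseline = {"S01": 31, "S02": 28, "S03": 35, "S04": 29, "S05": 30}
endline = {"S01": 36, "S02": 30, "S03": 39, "S05": 34, "S06": 38}

result = paired_change(baseline, endline)
print(result)
```

Here S04 (no endline score) and S06 (no baseline score) are excluded, leaving four matched students with a mean change of 3.75 points. For a p-value, SciPy's `scipy.stats.ttest_rel` runs the same paired test on the matched score lists.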

- Design the approach of measurement

Quantitative methods address “how much” research questions by collecting numerical data on the variables of interest in order to measure a phenomenon and test hypotheses about it. Data is collected through self-report measures such as surveys, or through direct observations in controlled settings. For example, Likert-scale surveys or observation tools can be used to measure the impact of an empathy-based intervention on classroom bullying.

Qualitative methods, by contrast, address “why” research questions by collecting non-numerical data, such as participants’ own words and observation notes, to interpret phenomena. Data is collected through semi-structured interviews, focus groups, and field observations. For instance, individual interviews with parents, teachers, and students can provide insights into their experiences and perceptions.

Finally, mixed methods combine quantitative and qualitative approaches to produce comprehensive findings and understand complex phenomena. Researchers collect both types of data and use triangulation to combine and compare the findings, building a holistic understanding. For instance, a mixed-methods approach is valuable for investigating both whether an empathy-based intervention reduces bullying and how participants experience it.
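Likert-scale surveys are usually scored by averaging item responses, after reverse-coding negatively worded items so that a higher score always means more of the skill. The sketch below assumes a 5-point scale (1 = strongly disagree, 5 = strongly agree) and uses hypothetical item names (`emp1` to `emp3`); a real tool's manual may specify its own scoring and missing-data rules, which should take precedence.

```python
from typing import Optional

SCALE_MIN, SCALE_MAX = 1, 5  # assumed 5-point Likert scale


def score_respondent(responses: dict, reverse_items: set) -> Optional[float]:
    """Mean item score after reverse-coding negatively worded items.

    Returns None if any item is unanswered (a deliberately strict rule).
    """
    if not responses:
        raise ValueError("no items to score")
    scored = []
    for item, value in responses.items():
        if value is None:
            return None
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"{item}: {value} is outside {SCALE_MIN}-{SCALE_MAX}")
        if item in reverse_items:
            # On a 1-5 scale this maps 1->5, 2->4, 3->3, 4->2, 5->1.
            value = SCALE_MIN + SCALE_MAX - value
        scored.append(value)
    return sum(scored) / len(scored)


# Hypothetical empathy items; "emp3" is negatively worded (e.g. "I don't
# notice when a classmate is upset"), so it is reverse-coded.
reverse_items = {"emp3"}
student_a = {"emp1": 4, "emp2": 5, "emp3": 2}
student_b = {"emp1": 3, "emp2": None, "emp3": 4}

print(score_respondent(student_a, reverse_items))
print(score_respondent(student_b, reverse_items))
```

Returning `None` for any skipped item keeps the example simple; many instruments instead allow a score when most items are answered, so check the tool's guidance before choosing a rule.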

- Design the tools for measurement

Researchers can explore existing tools that measure the SEL variables of interest and adapt them where needed. Tool selection should take into account participants’ demographic characteristics, such as age, gender, and socioeconomic status. Commonly used quantitative SEL tools include the Social-emotional well-being tool, the Life Skills Assessment Scale, and the Social Skills Improvement System (SSIS) Rating Scales. Common qualitative options include the Classroom Assessment Scoring System (CLASS), an observation tool, as well as purpose-built semi-structured interview schedules and focus group guides.
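Adapting a tool (for example, translating items or simplifying wording for younger children) can change how it behaves, so it is common to recheck reliability on a small pilot sample. Cronbach's alpha is one widely used index of internal consistency (it says nothing about validity). The minimal sketch below assumes complete responses, with rows as respondents and columns as items; the pilot data is made up.

```python
import statistics


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for complete responses (rows = respondents, columns = items)."""
    if len(rows) < 2:
        raise ValueError("need at least two respondents")
    k = len(rows[0])
    if k < 2 or any(len(row) != k for row in rows):
        raise ValueError("every respondent needs the same number (>= 2) of items")
    item_variances = [statistics.variance(col) for col in zip(*rows)]
    total_variance = statistics.variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    # alpha = k/(k-1) * (1 - sum of item variances / variance of total scores)
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical pilot data: 5 students x 3 items of an adapted empathy subscale.
pilot = [
    [4, 5, 4],
    [3, 3, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 4, 3],
]
print(round(cronbach_alpha(pilot), 3))
```

For this made-up pilot data the function returns about 0.857. A pilot of five students is far too small for real decisions; it only keeps the arithmetic easy to follow.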

- Decide the analysis plan for the measurement

Once the data has been collected, it is crucial to establish an analysis plan tailored to the research question at hand. For quantitative data, options include descriptive analysis, which summarizes the measured data, and inferential statistical analysis, which tests for trends, differences, and relationships that help explain a phenomenon. For qualitative data, methods such as thematic analysis or interpretative phenomenological analysis can provide rich and nuanced insights. Thematic analysis involves identifying and organizing patterns or themes within the data, while interpretative phenomenological analysis focuses on understanding participants’ lived experiences and the meanings they associate with a particular phenomenon. Selecting an analysis method that fits the question lets researchers examine the data in depth and draw insights that contribute to a comprehensive understanding of the research topic.
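Descriptive analysis often starts with a per-group summary of each score. The sketch below uses only Python's standard `statistics` module and assumes hypothetical records with a `grade` field and an `empathy` score, where `None` marks a missing score; missing scores are skipped rather than treated as zero.

```python
import math
import statistics
from collections import defaultdict


def describe_by_group(records: list[dict], group_key: str, score_key: str) -> dict:
    """n, mean, SD, and median of a score within each group, skipping missing scores."""
    groups = defaultdict(list)
    for record in records:
        score = record.get(score_key)
        if score is not None:
            groups[record[group_key]].append(score)
    summary = {}
    for group, scores in sorted(groups.items()):
        summary[group] = {
            "n": len(scores),
            "mean": statistics.mean(scores),
            # The sample SD is undefined for a single score.
            "sd": statistics.stdev(scores) if len(scores) > 1 else math.nan,
            "median": statistics.median(scores),
        }
    return summary


# Hypothetical endline records; one grade 7 score is missing.
records = [
    {"grade": 6, "empathy": 3.0},
    {"grade": 6, "empathy": 3.5},
    {"grade": 6, "empathy": 4.0},
    {"grade": 7, "empathy": 2.0},
    {"grade": 7, "empathy": 4.0},
    {"grade": 7, "empathy": None},
]

for grade, summary_row in describe_by_group(records, "grade", "empathy").items():
    print(grade, summary_row)
```

Reporting `n` alongside the mean makes it visible when a group's summary rests on very few responses, as with grade 7 here.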

- Decide the audience for communication

Once the data analysis is complete, identify the intended audience for the evaluation findings. This audience could include policymakers, educators, funders, or other relevant stakeholders. Knowing the target audience helps shape the writing style and approach of the report so that it communicates effectively. It is also essential to state the purpose of the evaluation clearly and to outline the changes or impacts expected as a result of it. This helps ensure that the findings are understood and can inform meaningful action and decision-making.

- Reflection

After completing the assessment, the researcher should reflect on the limitations of the research. Critically evaluating the sample size, data collection process, potential measurement errors, missing data, ethical considerations, and external factors that may have influenced the results strengthens the robustness of the research.
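Two of the limitations listed above, missing data and the loss of participants between baseline and endline (attrition), are easy to quantify before they are written up. The sketch below is a minimal illustration with made-up IDs and item names; it treats both `None` values and absent keys as missing responses.

```python
def attrition(baseline_ids: list[str], endline_ids: list[str]) -> tuple[float, list[str]]:
    """Share and sorted list of baseline participants with no endline record."""
    baseline_set = set(baseline_ids)
    if not baseline_set:
        raise ValueError("no baseline participants")
    lost = sorted(baseline_set - set(endline_ids))
    return len(lost) / len(baseline_set), lost


def item_missing_rates(rows: list[dict], items: list[str]) -> dict[str, float]:
    """Share of respondents who left each item blank (absent keys count as missing)."""
    if not rows:
        raise ValueError("no responses")
    return {item: sum(row.get(item) is None for row in rows) / len(rows) for item in items}


# Hypothetical participant IDs and item responses.
rate, lost = attrition(
    ["S01", "S02", "S03", "S04", "S05"],
    ["S01", "S02", "S03", "S05", "S06"],
)
print(f"Attrition: {rate:.0%} (lost: {lost})")

responses = [
    {"emp1": 4, "emp2": None},
    {"emp1": 3, "emp2": 5},
    {"emp1": None, "emp2": 2},
    {"emp1": 5},
]
print(item_missing_rates(responses, ["emp1", "emp2"]))
```

In this example one of five baseline students (S04) has no endline record, and half of the respondents skipped `emp2`; an item with that much missing data may deserve a note on wording or placement in the report.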

Conclusion:

- Measuring social and emotional learning before introducing interventions, curricula, or training in schools helps determine students’ levels of SEL competency and design appropriate approaches.

- The process includes deciding what to measure, setting timelines, choosing the approach and tools, planning the analysis, identifying the audience, and reflecting on research limitations.

About the Authors

Ms Aakanksha Agrawal is the Associate Manager of Research and Impact at Dream a Dream, Bangalore. She is a passionate researcher and educator who aims to promote mental health in the Indian education system. Her research interests are social and emotional learning, the psychology of education, and well-being.

LinkedIn: https://www.linkedin.com/in/aakanksha-agrawal-04916313b/

Twitter: @aakanksha_sel

Dr Sreehari Ravindranath is the Associate Director of Research and Impact at Dream a Dream, Bangalore. He is a passionate researcher and educator with a mission to reimagine education in India. He specializes in pedagogies of social and emotional learning and well-being, education in emergencies, and thriving in adversity.

LinkedIn: https://www.linkedin.com/in/sreehari-ravindranath/

Twitter: @sreehariRavind3